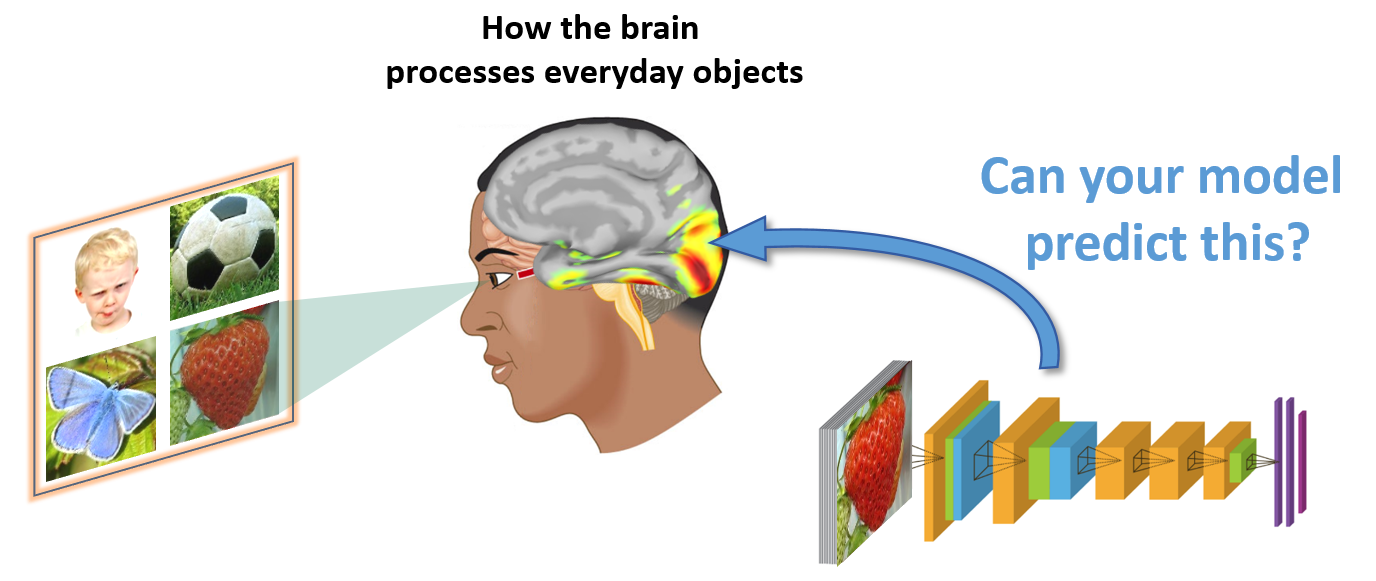

Understanding how the human brain works is one of the greatest challenges that science faces today. The Algonauts challenge proposes an open and quantified test of computational models on human brain data, including both spatial (e.g., fMRI) and temporal (e.g., MEG/EEG) measurements. This will allow us to assess the real progress of the field of cognitive computational neuroscience (see also Brain-Score and the Neural Prediction Challenge).

The primary target of the 2019 challenge is the visual brain: the part of the brain that is responsible for seeing. Currently, certain deep neural networks trained on object recognition (Yamins et al. 2014, Khaligh-Razavi & Kriegeskorte 2014, Cichy et al. 2016, etc.) are the best at explaining brain activity. Can your model do better?

The main goal of the 2019 Algonauts challenge is to use computational models to predict brain activity measured with two techniques: fMRI data from two brain regions (Track 1) or MEG data from two temporal windows (Track 2). The brain activity was measured while subjects viewed sets of images; for each image set, fMRI and MEG data were collected from the same 15 human subjects. Participants can choose to play in Track 1 (fMRI), Track 2 (MEG), or both.

Underlying object recognition is a hierarchical processing cascade (called the “ventral visual stream”) in which neural activity unfolds in space and time. At the beginning of the hierarchy, there is a region called the early visual cortex (EVC), shown in red in the figure. Neurons in this area respond to lines or edges with specific orientations. As EVC is early in the processing cascade, it responds early in time.

Later in this hierarchy there is a region called the inferior temporal cortex (IT), shown in yellow. Neurons in this region have been found to respond to images of objects.

In the 2019 edition of the Algonauts challenge, the target is to explain brain activity in two segments of the visual processing cascade. For Track 1, these are two brain regions, EVC and IT. For Track 2, they are two time intervals relative to when an image was shown to the subjects: an early interval around the peak of the response in EVC and a later interval around the peak of the response in IT.

Given a set of images of everyday objects and the brain activity recorded while human subjects viewed those images, participants devise computational models that predict brain activity. These models are then used to predict brain activity for a brand-new set of images.

The goal of Track 1 (fMRI) is to construct models that best predict activity in 2 regions of the brain, early (EVC) and late (IT) in the human visual hierarchy. Participants submit their model responses to a test image set which we will compare against held-out fMRI data. The representational similarity analysis approach (a technique that maps models and fMRI data to a common similarity space to enable comparison) will be used to score your submissions.

We provide the following data (available for download here):

Learn more and participate in Track 1 here

| Rank | Team Name | EVC: Noise Normalized R² (%) | IT: Noise Normalized R² (%) | Score: Average Noise Normalized R² (%) |
|---|---|---|---|---|
| | Noise Ceiling | 100 | 100 | 100 |

The three main columns are EVC and IT (the two brain regions of interest) and Score (the average of EVC and IT). The entry with the highest Score is ranked first. R² is the squared Spearman correlation between the submitted model RDM (representational dissimilarity matrix) and the EVC or IT RDM. "Noise normalized" means that the R² values are expressed as a percentage of the noise ceiling (see here for more details). AlexNet is the organizer baseline.
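The scoring described above can be sketched in a few lines of Python. This is a minimal, non-authoritative sketch rather than the official development-kit code: it assumes each RDM (representational dissimilarity matrix) is a square, symmetric NumPy array, that R² is computed per subject on the off-diagonal upper triangle and then averaged across subjects, and that the noise ceiling is supplied as an R² value on the same scale. All function names are hypothetical.

```python
import numpy as np


def upper_triangle(rdm):
    """Off-diagonal upper-triangle entries of a square RDM, as a 1-D vector."""
    rdm = np.asarray(rdm, dtype=float)
    rows, cols = np.triu_indices(rdm.shape[0], k=1)
    return rdm[rows, cols]


def average_ranks(x):
    """Rank values from 1 (smallest) upward; tied values share their mean rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    _, inverse = np.unique(x, return_inverse=True)
    inverse = inverse.ravel()
    mean_rank = np.bincount(inverse, weights=ranks) / np.bincount(inverse)
    return mean_rank[inverse]


def spearman_r(a, b):
    """Spearman correlation, computed as the Pearson correlation of the ranks."""
    return np.corrcoef(average_ranks(a), average_ranks(b))[0, 1]


def noise_normalized_r2(model_rdm, subject_rdms, noise_ceiling_r2):
    """Mean squared Spearman r over subjects, as a percentage of the noise ceiling.

    Assumption: noise_ceiling_r2 is an unnormalized R^2 value on the same scale.
    """
    model_vec = upper_triangle(model_rdm)
    r2 = [spearman_r(model_vec, upper_triangle(s)) ** 2 for s in subject_rdms]
    return 100.0 * float(np.mean(r2)) / noise_ceiling_r2


def challenge_score(evc_pc, it_pc):
    """'Score' column: average of the two noise-normalized R^2 values (%)."""
    return (evc_pc + it_pc) / 2.0
```

Only the upper triangle is used because an RDM is symmetric with a zero diagonal, so the remaining entries would be redundant. The same functions apply unchanged to Track 2 by passing early- or late-interval MEG RDMs in place of the EVC or IT RDMs.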


The goal of Track 2 (MEG) is to construct models that best predict brain data from two time intervals, corresponding to early and late stages of visual processing relative to image onset. Participants submit their model responses to a test image set, which we will compare against held-out MEG data. The representational similarity analysis approach (a technique that maps models and MEG data to a common similarity space to enable comparison) will be used to score your submissions. The 20 ms time intervals are defined based on the peak latency of the MEG/fMRI fusion time series (0–200 ms) in EVC and IT, and are shown with the corresponding image sets below (see Cichy et al. 2014, 2016; Mohsenzadeh et al. 2019 for the MEG/fMRI fusion method).
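The interval definition above can be illustrated with a simplified form of MEG/fMRI fusion. In this hedged sketch, the fusion time course at each MEG time point is the Spearman correlation between that time point's MEG RDM and a region's fMRI RDM, and a 20 ms interval is then placed around the peak found within 0–200 ms. Centering the window on the peak, the array layout (one MEG RDM per time point) and all function names are assumptions made for illustration, not the organizers' exact procedure.

```python
import numpy as np


def upper_triangle(rdm):
    """Off-diagonal upper-triangle entries of a square RDM, as a 1-D vector."""
    rdm = np.asarray(rdm, dtype=float)
    rows, cols = np.triu_indices(rdm.shape[0], k=1)
    return rdm[rows, cols]


def spearman_r(a, b):
    """Spearman correlation via Pearson correlation of ranks (assumes no ties)."""
    rank_a = np.argsort(np.argsort(a)).astype(float)
    rank_b = np.argsort(np.argsort(b)).astype(float)
    return np.corrcoef(rank_a, rank_b)[0, 1]


def fusion_time_course(meg_rdms, fmri_rdm):
    """Correlate one fMRI RDM with the MEG RDM at every time point.

    meg_rdms: array of shape (n_times, n_images, n_images).
    """
    fmri_vec = upper_triangle(fmri_rdm)
    return np.array([spearman_r(upper_triangle(m), fmri_vec) for m in meg_rdms])


def peak_interval(times_ms, fusion, search=(0.0, 200.0), width_ms=20.0):
    """Return (start_ms, end_ms) of a width_ms window centred on the fusion peak.

    Only time points inside the search range (inclusive) are considered.
    """
    times_ms = np.asarray(times_ms, dtype=float)
    in_range = np.flatnonzero((times_ms >= search[0]) & (times_ms <= search[1]))
    peak_time = times_ms[in_range[np.argmax(fusion[in_range])]]
    return peak_time - width_ms / 2.0, peak_time + width_ms / 2.0
```

Run once with the EVC RDM and once with the IT RDM, this would yield an early and a late interval, respectively.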

We provide the following data (available for download here):

Learn more and participate in Track 2 here

| Rank | Team Name | Early Interval: Noise Normalized R² (%) | Late Interval: Noise Normalized R² (%) | Score: Average Noise Normalized R² (%) |
|---|---|---|---|---|
| | Noise Ceiling | 100 | 100 | 100 |

The three main columns are Early and Late Interval (the two time intervals of interest) and Score (the average of Early and Late). The entry with the highest Score is ranked first. R² is the squared Spearman correlation between the submitted model RDM (representational dissimilarity matrix) and the Early or Late Interval RDM. "Noise normalized" means that the R² values are expressed as a percentage of the noise ceiling (see here for more details). AlexNet is the organizer baseline.


Current non-invasive techniques for measuring brain activity resolve it well either in space or in time, but not both. We provide measurements of brain activity, recorded while humans viewed a set of images, from two techniques: (1) functional magnetic resonance imaging (fMRI), with millimeter spatial resolution, and (2) magnetoencephalography (MEG), with millisecond temporal resolution. Accordingly, there is one challenge track for explaining brain data in space (fMRI) and one for explaining it in time (MEG).

Click here to learn more about fMRI and MEG

To compare computational models to human brains, we use representational similarity analysis (RSA). The idea is that if models and brains are similar, then they treat the same images as similar or dissimilar. RSA allows us to compare human brains and models at the level of their representations, in spite of the numerous differences between them (e.g., in silico vs. biological).
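The RSA idea can be made concrete with a short sketch. It assumes that a system's responses to N images are stored as an (N, features) array, where the features could be model units or fMRI voxels, and builds an N × N RDM using correlation distance (1 minus the Pearson correlation), the dissimilarity measure used for the training data. Because both RDMs are N × N regardless of how many units or voxels produced them, a model and a brain region become directly comparable. The function name and the toy arrays are illustrative only.

```python
import numpy as np


def correlation_rdm(responses):
    """Build an RDM from an (n_images, n_features) response matrix.

    Entry [i, j] is 1 - Pearson r between the responses to images i and j:
    0 means identical response patterns, 2 means perfectly anti-correlated.
    """
    responses = np.asarray(responses, dtype=float)
    return 1.0 - np.corrcoef(responses)  # rows (images) are treated as variables


# Toy example: 3 images, seen by a "model" with 4 units and a "brain" with 6 voxels.
model_responses = np.array([[1.0, 2.0, 3.0, 4.0],
                            [2.0, 4.0, 6.0, 8.0],
                            [4.0, 3.0, 2.0, 1.0]])
brain_responses = np.array([[0.1, 0.5, 0.2, 0.9, 0.3, 0.7],
                            [0.2, 1.0, 0.4, 1.8, 0.6, 1.4],
                            [0.9, 0.5, 0.8, 0.1, 0.7, 0.3]])

model_rdm = correlation_rdm(model_responses)
brain_rdm = correlation_rdm(brain_responses)
print(model_rdm.shape, brain_rdm.shape)  # both (3, 3) despite 4 units vs 6 voxels
```

In this toy case both systems treat images 0 and 1 as identical (distance 0) and image 2 as maximally different from them (distance 2), so their RDMs agree even though the raw responses live in spaces of different size.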

Click here to learn about RSA and the comparison metric

You can submit your model out of the box without any further training. However, we provide training brain data that can help optimize your model for predicting brain data.
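One simple way to use the training data, offered here only as a hedged illustration, is to choose which layer of an existing network to submit: compute an RDM for each candidate layer on the training images, compare it with the subjects' training RDMs, and keep the layer with the highest mean squared Spearman correlation. The layer names, the dictionary layout and the function names are hypothetical; other strategies, such as fine-tuning or combining layers, are equally possible.

```python
import numpy as np


def upper_triangle(rdm):
    """Off-diagonal upper-triangle entries of a square RDM, as a 1-D vector."""
    rdm = np.asarray(rdm, dtype=float)
    rows, cols = np.triu_indices(rdm.shape[0], k=1)
    return rdm[rows, cols]


def spearman_r(a, b):
    """Spearman correlation via Pearson correlation of ranks (assumes no ties)."""
    rank_a = np.argsort(np.argsort(a)).astype(float)
    rank_b = np.argsort(np.argsort(b)).astype(float)
    return np.corrcoef(rank_a, rank_b)[0, 1]


def select_layer(layer_rdms, subject_rdms):
    """Pick the layer whose training-set RDM best matches the subjects' RDMs.

    layer_rdms: dict mapping a (hypothetical) layer name to its model RDM.
    subject_rdms: list of per-subject brain RDMs for the same training images.
    Returns (best_layer_name, {layer_name: mean squared Spearman r}).
    """
    scores = {}
    for name, rdm in layer_rdms.items():
        model_vec = upper_triangle(rdm)
        r2 = [spearman_r(model_vec, upper_triangle(s)) ** 2 for s in subject_rdms]
        scores[name] = float(np.mean(r2))
    best = max(scores, key=scores.get)
    return best, scores
```

Selection would be done separately for each target (e.g., EVC and IT), and the chosen layer would then be run on the test images to produce the submitted model responses. Selecting on training images only keeps the test set untouched.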

Download the training data, test images, and development kit for the Algonauts Project 2019 here

The training data consist of brain activity from both fMRI and MEG in response to viewing images from two sets: the 92-image and 118-image sets. For each image set, the fMRI and MEG data were collected from the same 15 human subjects. For fMRI, the data provided are based on correlation distances for two brain regions, EVC and IT; for MEG, they are based on correlation distances in two time intervals (one corresponding to early brain responses associated with EVC and the other to later brain responses associated with IT).

For the challenge, we provide the 78 test images only. For all test data released post-challenge, please visit the Download page.

We also provide sample Python and MATLAB code for generating activations from AlexNet/VGG/ResNet, computing RDMs from these networks, and evaluating model RDMs against human brain data RDMs.

1. Participants can use any external data for model building. Participants who use the test image set for training (including brain data generated using the test image set) will be disqualified.

2. Each participant (single researcher or team) can make 10 submissions per day per track, up to a maximum of 250 submissions.

3. Participants should be ready to upload a short report (up to 4 pages) describing the model-building process for their best model to a preprint server (e.g., bioRxiv or arXiv), or to send it as a PDF by email to algonauts.mit@gmail.com. If you participated in the challenge, use this form to submit the challenge report. Only teams that submit a report via this form will be considered for participation in the challenge. The submission deadline is July 1, 2019 at 11:59 pm (UTC-4).

4. PRIZES FOR WINNERS: The top two entries in each track will each receive travel reimbursement for one attendee to present their method at the Algonauts Workshop at MIT on July 19-20, 2019. The top entry in each track will also receive a gift.

Please refer to this page for guidance on citation if you have used any data associated with the Algonauts Project 2019.

We will provide a more extensive paper including the results of the challenge at a later time point. When published, news will be announced via an update on our homepage.

Note: Teams with the best submission results will receive an invitation to the Algonauts Workshop, held at MIT on July 19-20, to give a talk about their method.
