There are several ways to relate brain measurements to computational models. For example, the 2019 edition of the Algonauts Challenge used representational similarity analysis (RSA; Kriegeskorte et al., 2008).

In the 2021 edition, we leave it up to you to choose the approach for predicting brain responses (see Challenge Rules). As an example, we guide the reader through one common approach, the voxel-wise encoding model (Naselaris et al., 2011; Wu et al., 2006), in which the response of each voxel is predicted independently from multiple features of a computational model, typically using regularized linear regression.
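Written out, one common form of this model is the following. It assumes ridge regression as the regularized linear regression (one common choice, not necessarily the one used in the development kit) and mean-centered features and responses, so that no intercept term is needed. Let $\mathbf{X} \in \mathbb{R}^{n \times d}$ hold the $d$ model features of the $n$ training videos, $\mathbf{y}_v \in \mathbb{R}^{n}$ the responses of voxel $v$ to those videos, and $\lambda > 0$ the regularization strength:

$$
\hat{y}_v(\mathbf{x}) = \mathbf{x}^\top \hat{\mathbf{w}}_v, \qquad
\hat{\mathbf{w}}_v = \arg\min_{\mathbf{w}} \lVert \mathbf{y}_v - \mathbf{X}\mathbf{w} \rVert_2^2 + \lambda \lVert \mathbf{w} \rVert_2^2 = \left(\mathbf{X}^\top \mathbf{X} + \lambda \mathbf{I}\right)^{-1} \mathbf{X}^\top \mathbf{y}_v
$$

Because every voxel is fit independently against the same $\mathbf{X}$, stacking the voxel responses as the columns of $\mathbf{Y}$ yields all weights at once, $\hat{\mathbf{W}} = \left(\mathbf{X}^\top \mathbf{X} + \lambda \mathbf{I}\right)^{-1} \mathbf{X}^\top \mathbf{Y}$, assuming a single $\lambda$ shared across voxels.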

The development kit includes an example implementation of the voxel-wise encoding model that uses AlexNet as the computational model.

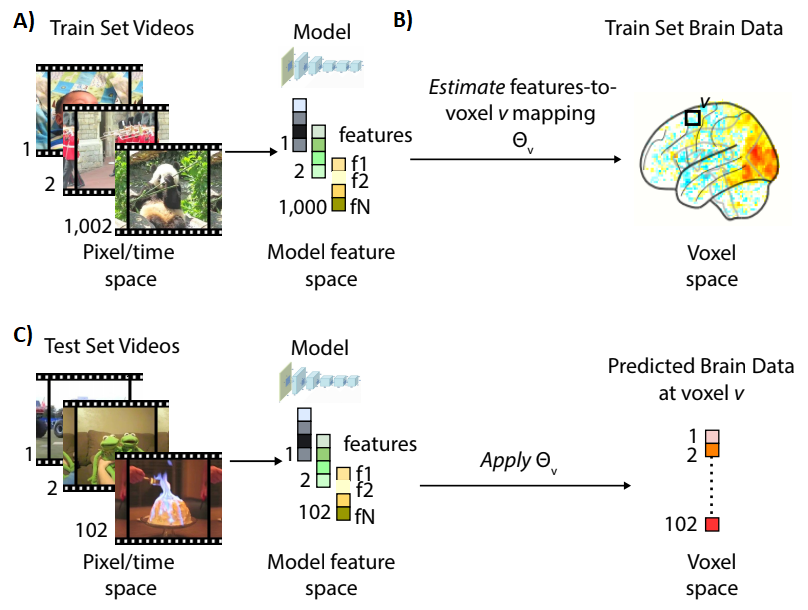

Figure 1: A) First, the features of a computational model are extracted from the training set videos. B) A mapping between model features and brain voxels is estimated from the training set videos. C) The estimated mapping is applied to the model features of the test set videos, generating the predicted activity of a given voxel v for each video.

The approach has three steps:

Step 1. The features of a computer vision model are extracted for each video (Fig. 1A). This changes the format of the data (from pixels to model features) and typically reduces its dimensionality. The features of a given model are interpreted as a hypothesis about the features that a given brain area might use to represent the stimulus.
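A minimal sketch of Step 1 in Python with NumPy follows. A real implementation would pass each frame through a pretrained network such as AlexNet; here `stand_in_layer`, a fixed random projection followed by a ReLU, is a hypothetical stand-in for one network layer so that the example runs without downloading weights. Averaging activations over frames and reducing dimensionality with PCA are assumptions about one reasonable pipeline, not a description of the development kit.

```python
import numpy as np


def stand_in_layer(frames, weights):
    """Hypothetical stand-in for one layer of a vision model such as AlexNet:
    flattens each frame and applies a fixed random projection plus ReLU."""
    flat = frames.reshape(frames.shape[0], -1)   # (n_frames, n_pixels)
    return np.maximum(flat @ weights, 0.0)       # (n_frames, n_units)


def video_features(video, weights):
    """Average the per-frame activations so each video yields one feature vector."""
    return stand_in_layer(video, weights).mean(axis=0)


def pca_reduce(features, n_components):
    """Project mean-centered features onto their top principal components (via SVD)."""
    centered = features - features.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(0)
n_videos, n_frames, height, width = 20, 8, 16, 16
videos = rng.random((n_videos, n_frames, height, width))  # toy "pixel" data
weights = rng.standard_normal((height * width, 512))       # toy layer weights

features = np.stack([video_features(v, weights) for v in videos])  # (20, 512)
reduced = pca_reduce(features, n_components=10)                     # (20, 10)
print(features.shape, reduced.shape)
```

In a real pipeline, the PCA projection should be estimated on the training videos only and then applied to the test videos, so that no information from the test set leaks into the features.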

Step 2. Using the training set provided, the features of the computational model are linearly mapped onto each voxel's responses (Fig. 1B). This step is needed because there is no reason to expect a one-to-one correspondence between voxels and model features. Instead, each voxel's response is hypothesized to be a weighted combination of the activations of multiple model features.
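Step 2 can be sketched with closed-form ridge regression in NumPy. Ridge is assumed here as the regularizer, the function names `fit_ridge` and `predict` are hypothetical, and the regularization strength `alpha` would normally be chosen on held-out data rather than fixed by hand.

```python
import numpy as np


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression for all voxels at once.

    X: (n_videos, n_features) model features for the training videos.
    Y: (n_videos, n_voxels) measured responses to the same videos.
    Returns weights W (n_features, n_voxels) and the training means used
    for centering, which are needed to predict responses to new videos.
    """
    x_mean, y_mean = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - x_mean, Y - y_mean
    n_features = X.shape[1]
    # Each column of W is an independent per-voxel solution; solving them
    # together is only a vectorization, not a coupling between voxels.
    W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(n_features), Xc.T @ Yc)
    return W, x_mean, y_mean


def predict(X_new, W, x_mean, y_mean):
    """Apply the fitted mapping to the features of new videos."""
    return (X_new - x_mean) @ W + y_mean


# Toy data: 200 videos, 10 features, 50 voxels.
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 10))
W_true = rng.standard_normal((10, 50))
Y = X @ W_true + 0.1 * rng.standard_normal((200, 50))

W, x_mean, y_mean = fit_ridge(X, Y, alpha=1.0)
print(W.shape)
```

Larger values of `alpha` shrink the weights toward zero, which trades some fit on the training videos for more stable predictions on new ones.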

Step 3. The mapping estimated from the training set is applied to the model features of the test set videos to predict synthetic brain data (Fig. 1C). The predicted brain data are then compared against the held-out, ground-truth brain data of the test set. Because the test videos were not used to estimate the mapping, this comparison measures how well the model generalizes to new stimuli rather than how well it fits its own training data. In the context of the challenge we perform this comparison for you, as we keep the test set brain data hidden.

Testing the model. If the computational model is a suitable model of the brain, the predictions of the encoding model will fit the empirically recorded data well. In the challenge, we test your predicted brain responses against the held-out brain responses to the test set videos. If you want to evaluate your model yourself before you submit, you can split the data we provide into your own training and testing sets. The development kit provides an example of how to do this.
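The local evaluation described above can be sketched as follows: hold out part of the provided training data, fit the mapping on the rest, predict the held-out responses, and score each voxel. Pearson correlation between predicted and measured responses, computed separately per voxel, is assumed here as the score; the challenge's own metric may differ. The toy data, the random split, and the helper names `voxelwise_correlation` and `ridge_fit_predict` are all hypothetical.

```python
import numpy as np


def voxelwise_correlation(Y_true, Y_pred):
    """Pearson correlation between measured and predicted responses, per voxel (column)."""
    t = Y_true - Y_true.mean(axis=0)
    p = Y_pred - Y_pred.mean(axis=0)
    return (t * p).sum(axis=0) / np.sqrt((t ** 2).sum(axis=0) * (p ** 2).sum(axis=0))


def ridge_fit_predict(X_fit, Y_fit, X_eval, alpha):
    """Fit a centered closed-form ridge mapping on one split and predict another."""
    x_mean, y_mean = X_fit.mean(axis=0), Y_fit.mean(axis=0)
    Xc = X_fit - x_mean
    W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X_fit.shape[1]),
                        Xc.T @ (Y_fit - y_mean))
    return (X_eval - x_mean) @ W + y_mean


# Toy stand-ins for the provided training data: model features and voxel responses.
rng = np.random.default_rng(2)
X = rng.standard_normal((300, 20))
Y = X @ rng.standard_normal((20, 40)) + rng.standard_normal((300, 40))

# Hold out 60 of the 300 videos as a local test set (random split).
order = rng.permutation(len(X))
fit_idx, val_idx = order[:240], order[240:]

Y_pred = ridge_fit_predict(X[fit_idx], Y[fit_idx], X[val_idx], alpha=10.0)
scores = voxelwise_correlation(Y[val_idx], Y_pred)
print(scores.shape, float(scores.mean()))
```

The split is made over videos, so every response used for scoring comes from a video the mapping never saw during fitting, mirroring how the hidden test set is used in the challenge.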